If the RankSense app detected the Robots.txt Blocking Important Pages SEO issue on your site, please follow these guidelines:

Issue Description:

The robots.txt file tells search engines which pages not to crawl/visit. Their correct use helps search engines crawl your site more efficiently. Unfortunately, if you use it incorrectly, you could prevent major search engines from finding valuable content to index. We detected pages that receive organic search clicks but are getting blocked in your robots.txt file.

The above description comes directly from the RankSense app. Essentially, it means that an important page on your site is currently blocked from being crawled by search engines. The block is caused by a rule in the site's robots.txt file.

An “important page” is defined by the RankSense app as a page that has previously generated organic search traffic, meaning that a user reached the page by clicking a result on a search engine results page.

If the page is now blocked from being crawled, search engines will have a difficult time continuing to rank it, since they can no longer see the content on the page or tell whether it has been updated.
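The check described above can be approximated in a few lines of Python. This is only a sketch, not how the RankSense app works internally: the robots.txt rules and the click counts are made-up sample data, and `find_blocked_important_pages` is a hypothetical helper name. It relies on the standard library's `urllib.robotparser`, which matches rules by simple path prefix and does not interpret `*` wildcards, so the sample rules avoid them.

```python
from urllib.robotparser import RobotFileParser

# Sample rules, assumed for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /ImportantPage
Disallow: /private/
"""

# Hypothetical organic search clicks per URL (sample data, not real traffic).
ORGANIC_CLICKS = {
    "https://www.example.com/ImportantPage": 120,
    "https://www.example.com/about": 45,
    "https://www.example.com/private/drafts": 0,
}


def find_blocked_important_pages(robots_txt, clicks_by_url, user_agent="*"):
    """Return URLs that have organic clicks but are disallowed by robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url
        for url, clicks in clicks_by_url.items()
        if clicks > 0 and not parser.can_fetch(user_agent, url)
    ]


blocked = find_blocked_important_pages(ROBOTS_TXT, ORGANIC_CLICKS)
print(blocked)  # ['https://www.example.com/ImportantPage']
```

Only `/ImportantPage` is reported: `/about` is crawlable, and `/private/drafts` is blocked but has no organic clicks, so it does not count as an important page.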

How To Fix This SEO Issue:

We recommend removing robots.txt directives that block pages with organic search traffic.

The above description comes directly from the RankSense app. Essentially, it means that the directive, or instruction, in the robots.txt file that is blocking the page from being crawled needs to be removed.

For example, let’s imagine this is your site’s robots.txt file:

user-agent: *
Disallow: /*?FacetedNaviagion=*
Disallow: /InternalSearchResults=*
Disallow: /ImportantPage

The “Disallow” directives, or instructions, tell search engines not to crawl pages on your site whose URLs match the pattern that follows Disallow:

For example, search engines will not be able to crawl www.example.com/category-name?FacetedNaviagion=blue or www.example.com/InternalSearchResults=search-lookup

The Disallow: /*?FacetedNaviagion=* and Disallow: /InternalSearchResults=* directives are the instructions blocking those pages from being crawled.

This is a good thing, though, as faceted navigation pages and internal search results generally should not be crawled by search engines.

However, the problem comes with the Disallow: /ImportantPage directive: www.example.com/ImportantPage should be crawled by search engines, but currently cannot be because of that directive.
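To see how these patterns apply to the example URLs, here is a minimal Python sketch of wildcard matching as major search engines interpret it: `*` matches any sequence of characters, a trailing `$` anchors the end of the URL, and everything else is matched literally from the start of the path. The helper names `rule_to_regex` and `is_disallowed` are hypothetical, and the sketch deliberately ignores Allow rules, user-agent groups, and rule precedence.

```python
import re


def rule_to_regex(rule_path):
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape the literal pieces and join them with '.*' wherever the rule had '*'.
    body = ".*".join(re.escape(piece) for piece in rule_path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path_and_query, disallow_rules):
    """True if any Disallow pattern matches from the start of the path."""
    return any(rule_to_regex(rule).match(path_and_query) for rule in disallow_rules)


# The three Disallow patterns from the example robots.txt file.
DISALLOW_RULES = [
    "/*?FacetedNaviagion=*",
    "/InternalSearchResults=*",
    "/ImportantPage",
]

for path in [
    "/category-name?FacetedNaviagion=blue",
    "/InternalSearchResults=search-lookup",
    "/ImportantPage",
    "/category-name",
]:
    status = "blocked" if is_disallowed(path, DISALLOW_RULES) else "crawlable"
    print(path, "->", status)
```

The first three paths are reported as blocked and `/category-name` as crawlable. Blocking the first two is intended; blocking `/ImportantPage` is the problem.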

We can fix this easily by removing that directive.

So the updated robots.txt file should look like this:

user-agent: *
Disallow: /*?FacetedNaviagion=*
Disallow: /InternalSearchResults=*

This fixes our issue.
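If you prefer to script the edit, the sketch below removes a single Disallow rule from robots.txt content and then confirms that the page is crawlable again. It is only an illustration: `remove_disallow` is a hypothetical helper, the file content is the example from this article, and the final check uses Python's `urllib.robotparser`, which does prefix matching only and does not interpret the `*` wildcards in the remaining rules.

```python
from urllib.robotparser import RobotFileParser

# The example robots.txt from this article.
ORIGINAL = """\
user-agent: *
Disallow: /*?FacetedNaviagion=*
Disallow: /InternalSearchResults=*
Disallow: /ImportantPage
"""


def remove_disallow(robots_txt, path):
    """Drop every 'Disallow: <path>' line and leave all other lines untouched."""
    kept = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        # Ignore a trailing '# comment' when comparing the rule's path.
        rule_path = value.split("#", 1)[0].strip()
        if field.strip().lower() == "disallow" and rule_path == path:
            continue
        kept.append(line)
    return "\n".join(kept) + "\n"


updated = remove_disallow(ORIGINAL, "/ImportantPage")
print(updated)

# Verify that the page is no longer blocked for a generic crawler.
parser = RobotFileParser()
parser.parse(updated.splitlines())
print(parser.can_fetch("*", "https://www.example.com/ImportantPage"))  # True
```

The helper matches the rule's path exactly, so removing `/ImportantPage` leaves the faceted navigation and internal search rules in place.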

How To Fix This SEO Issue Through the RankSense App:

Not only can the RankSense app detect this SEO issue, but it can also fix it in a couple of clicks.

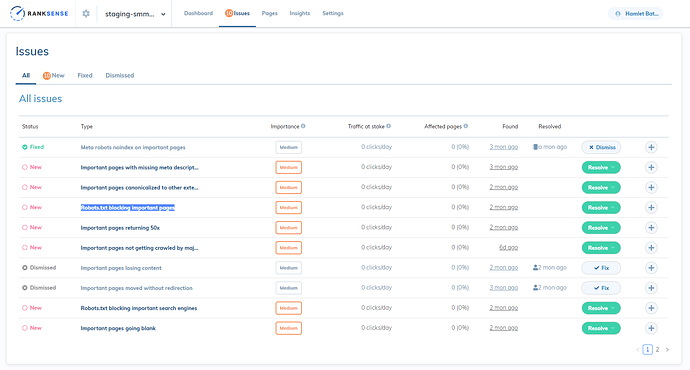

First, if the Robots.txt Blocking Important Pages SEO issue was detected on the site, it will be listed either on the main “Dashboard” under “Latest Issues”, or under the “Issues” tab in the top navigation.

The Status column indicates whether the issue was detected recently or some time ago.

The Type column will state the SEO issue detected, in this case Robots.txt Blocking Important Pages.

The Importance column will show the severity of the issue. We rank issues by their severity and urgency. Issues that are more serious or that affect more popular pages are given higher priority.

The Traffic at Stake column shows how many search clicks the pages with this issue generate per day.

The Affected Pages column shows how many pages have been detected with this issue.

The Found column lists when the issue was found.

The Resolved column will show when the issue was fixed by RankSense, if it was fixed.

*Note that these column headers are listed under the “Issues” tab view, but there are no headers under the “Latest Issues” view in the main “Dashboard”, though the data is there.

The Resolve button will give you the options to either Fix or Dismiss the issue. (More on this in a bit.)

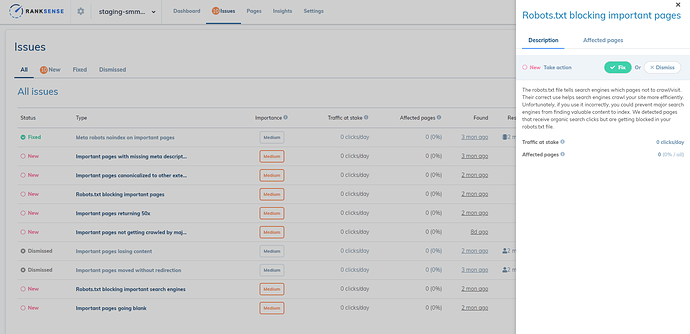

If you click on the + button, a pop-up window will appear with a description of the issue. This window also shows the Fix and Dismiss options.

Clicking on “Affected Pages” will list the actual pages that were detected with the issue.

Clicking on Dismiss, from either view, will mark the issue as “Dismissed”. Use this option if the page was blocked intentionally for a specific purpose.

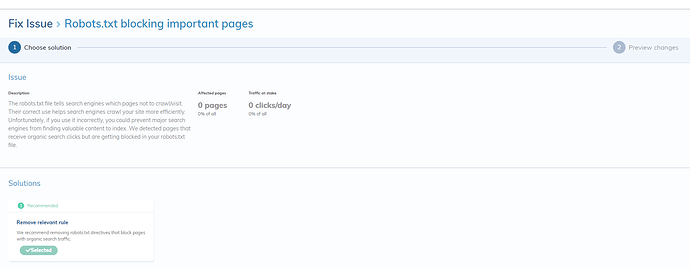

Clicking on Fix will take you to the “Fix Issue” page:

This page includes a “Solutions” section with the available options to fix the problem.

In this case the available solution will be:

Remove relevant rule

And you will be provided with a small description of our recommendation:

We recommend removing robots.txt directives that block pages with organic search traffic.

Next click on the “Continue” button at the bottom of the screen:

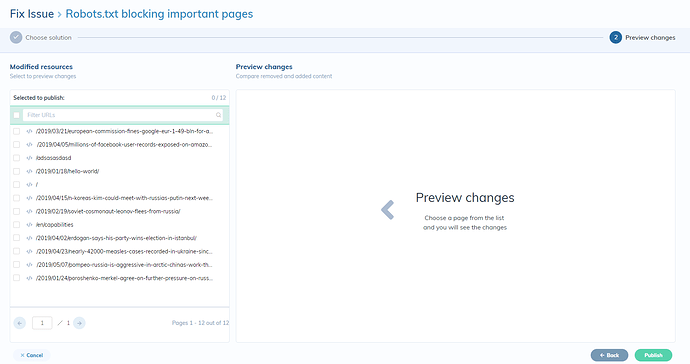

You will then be able to preview the SEO changes that you are about to make.

Clicking on “Publish” will implement this SEO fix directly on your site through our Cloudflare integration and the SEO issue will be resolved!

Note that a fix implemented through the RankSense app is meant as a quick patch while you find the time to implement the solution on the site directly. Removing the RankSense app from the site removes the SEO fix as well.

However, RankSense lets you implement SEO fixes quickly without developer time and, more importantly, tells you which of the fixes you implemented had the biggest impact on SEO, so you can spend costly developer time only on the fixes that produced great results for your site.

Please let us know if you have any questions.